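[Offline Reinforcement Learning Chatbot] A Record of Attempting an Implementation with Policy Gradient - KoGPT2 Fine-tuning (2)

2023. 8. 20. 03:08 · Research Projects/Reinforcement Learning Chatbot

※This is only a record of my attempts, not a correct method, so please do not follow it.※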

3. First attempt at KoGPT2 fine-tuning

1) Code from the original paper

*User-defined functions for training

    def train(input_variable, lengths, target_variable, mask, max_target_len, encoder, decoder, embedding, encoder_optimizer, decoder_optimizer, batch_size, clip, max_length=15):
        # Zero gradients
        encoder_optimizer.zero_grad()
        decoder_optimizer.zero_grad()

        # Set device options
        input_variable = input_variable.to(device)
        target_variable = target_variable.to(device)
        mask = mask.to(device)
        print("train-mask", mask[:3])
        # Lengths for rnn packing should always be on the cpu
        lengths = lengths.to("cpu")

        ...

        # Perform backpropagation
        loss.backward()

        ...

        return sum(print_losses) / n_totals

    def train_iters(model_name, voc, pairs, encoder, decoder, encoder_optimizer, decoder_optimizer, embedding, encoder_n_layers, decoder_n_layers, save_dir, n_iteration, batch_size, print_every, save_every, clip, corpus_name, loadFilename, hidden_size, checkpoint=None):
        # Load batches for each iteration
        training_batches = [batch_2_train_data(voc, [random.choice(pairs) for _ in range(batch_size)])
                            for _ in range(n_iteration)]

        # Initializations
        print('Initializing ...')
        start_iteration = 1
        print_loss = 0
        if checkpoint:
            start_iteration = checkpoint['iteration'] + 1

        # Training loop
        print("Training...")
        for iteration in range(start_iteration, n_iteration + 1):
            training_batch = training_batches[iteration - 1]
            # Extract fields from batch
            input_variable, lengths, target_variable, mask, max_target_len = training_batch

            ...

*Code that actually runs the training

    voc, pairs = load_prepare_data(corpus, corpus_name, datafile, save_dir)

    # Print some pairs to validate
    print("\npairs:")
    for pair in pairs[:10]:
        print(pair)

    pairs = trim_rare_words(voc, pairs, min_count=3)

    # Configure models
    model_name = 'cb_model'
    attn_model = 'dot'
    # attn_model = 'general'
    # attn_model = 'concat'
    hidden_size = 500
    encoder_n_layers = 2
    decoder_n_layers = 2
    dropout = 0.1
    batch_size = 64

    # Set checkpoint to load from; set to None if starting from scratch
    loadFilename = None
    checkpoint_iter = 10000  # 4000

    # Load model if a loadFilename is provided
    if loadFilename:
        # If loading on same machine the model was trained on
        # checkpoint = torch.load(loadFilename)
        # If loading a model trained on GPU to CPU
        checkpoint = torch.load(loadFilename, map_location=torch.device('cpu'))
        encoder_sd = checkpoint['en']
        decoder_sd = checkpoint['de']
        encoder_optimizer_sd = checkpoint['en_opt']
        decoder_optimizer_sd = checkpoint['de_opt']
        embedding_sd = checkpoint['embedding']
        voc.__dict__ = checkpoint['voc_dict']

    print('Building encoder and decoder ...')
    # Initialize word embeddings
    embedding = nn.Embedding(voc.num_words, hidden_size)
    if loadFilename:
        embedding.load_state_dict(embedding_sd)
    # Initialize encoder & decoder models
    encoder = EncoderRNN(hidden_size, embedding, encoder_n_layers, dropout)
    decoder = LuongAttnDecoderRNN(attn_model, embedding, hidden_size, voc.num_words, decoder_n_layers, dropout)
    if loadFilename:
        encoder.load_state_dict(encoder_sd)
        decoder.load_state_dict(decoder_sd)
    # Use appropriate device
    encoder = encoder.to(device)
    decoder = decoder.to(device)
    print('Models built and ready to go!')

    # Configure training/optimization
    clip = 50.0
    teacher_forcing_ratio = 1.0
    learning_rate = 0.0001
    decoder_learning_ratio = 5.0
    n_iteration = 500  # 4000
    print_every = 1
    save_every = 1500

    # Ensure dropout layers are in train mode
    encoder.train()
    decoder.train()

    # Initialize optimizers
    print('Building optimizers ...')
    encoder_optimizer = optim.Adam(encoder.parameters(), lr=learning_rate)
    decoder_optimizer = optim.Adam(
        decoder.parameters(), lr=learning_rate * decoder_learning_ratio)
    if loadFilename:
        encoder_optimizer.load_state_dict(encoder_optimizer_sd)
        decoder_optimizer.load_state_dict(decoder_optimizer_sd)

    # Move optimizer state tensors to the same device as the models
    # (.to(device) also works on a CPU-only machine, unlike .cuda())
    for state in encoder_optimizer.state.values():
        for k, v in state.items():
            if isinstance(v, torch.Tensor):
                state[k] = v.to(device)

    for state in decoder_optimizer.state.values():
        for k, v in state.items():
            if isinstance(v, torch.Tensor):
                state[k] = v.to(device)

    # Run training iterations
    print("Starting Training!")
    train_iters(model_name, voc, pairs, encoder, decoder, encoder_optimizer, decoder_optimizer,
                embedding, encoder_n_layers, decoder_n_layers, save_dir, n_iteration, batch_size,
                print_every, save_every, clip, corpus_name, loadFilename, hidden_size)

-Define the Seq2seq model architecture and first run supervised learning with that model
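The script above calls `trim_rare_words(voc, pairs, min_count=3)`, but its body is not shown in this post. As a rough illustration of what such a step usually does, here is a minimal sketch under my own assumptions: the function name `trim_rare_pairs` is hypothetical, it works on whitespace-split strings instead of the `voc` object, and it simply drops every pair that contains a word seen fewer than `min_count` times.

```python
from collections import Counter
from typing import List, Tuple

Pair = Tuple[str, str]


def trim_rare_pairs(pairs: List[Pair], min_count: int = 3) -> List[Pair]:
    """Drop every pair that contains a word seen fewer than min_count times."""
    # Count each word over all sentences of all pairs.
    counts = Counter(
        word for pair in pairs for sentence in pair for word in sentence.split()
    )
    # Keep a pair only if all of its words are frequent enough.
    return [
        pair
        for pair in pairs
        if all(
            counts[word] >= min_count
            for sentence in pair
            for word in sentence.split()
        )
    ]


pairs = [
    ("hi there", "hi"),
    ("hi there", "there there"),
    ("hi zebra", "hi"),
]
kept = trim_rare_pairs(pairs, min_count=3)
```

In this toy data "zebra" occurs only once, so the third pair is removed while the first two are kept.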

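2) Training data for KoGPT2

*For the first KoGPT2 fine-tuning attempt, I did not use the data-splitting scheme from the reference paper. Instead, I split the data by simply pairing up sentences two at a time within each dialogue.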

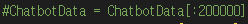

-Trained on 200,000 examples built this way
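The pairing step described above could be sketched roughly as follows. This is only an illustration under my own assumptions, not the code actually used: `make_pairs` and its `stride` parameter are hypothetical names, and the text does not say whether the pairs overlap, so both variants are shown.

```python
from typing import List, Tuple


def make_pairs(dialogue: List[str], stride: int = 2) -> List[Tuple[str, str]]:
    """Pair each selected utterance with the one that follows it.

    stride=2 gives disjoint pairs: (1st, 2nd), (3rd, 4th), ...
    stride=1 gives overlapping pairs: (1st, 2nd), (2nd, 3rd), ...
    A trailing utterance without a partner is dropped.
    """
    return [
        (dialogue[i], dialogue[i + 1])
        for i in range(0, len(dialogue) - 1, stride)
    ]


dialogue = ["u1", "u2", "u3", "u4", "u5"]
disjoint_pairs = make_pairs(dialogue)                # stride=2
overlapping_pairs = make_pairs(dialogue, stride=1)
```

With five utterances, the disjoint variant yields two pairs and drops the last utterance, while the overlapping variant yields four pairs.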

3) KoGPT2 training code

    print("Initial training start")
    loss_list = []
    model.train()
    for epoch in range(2):
        for batch_idx, samples in enumerate(train_dataloader):
            if batch_idx % 500 == 0:
                print(f"epoch: {epoch}", f"batch_idx: {batch_idx}")
            optimizer.zero_grad()
            token_ids, mask, label = samples
            out = model(token_ids)
            out = out.logits  # vocabulary logits, shape (batch, seq_len, vocab_size)
            # Broadcast the mask over the vocabulary dimension
            mask_3d = mask.unsqueeze(dim=2).repeat_interleave(repeats=out.shape[2], dim=2).to(device)
            # Replace the logits at masked-out positions with a large negative constant
            mask_out = torch.where(mask_3d == 1, out, Sneg * torch.ones_like(out)).to(device)
            # CrossEntropyLoss expects (batch, vocab_size, seq_len)
            loss = criterion(mask_out.transpose(2, 1), label).to(device)
            # Normalize by the number of unmasked tokens
            avg_loss = loss.sum() / mask.sum()
            # Store a plain float so the computation graph is not kept alive
            loss_list.append(avg_loss.item())
            avg_loss.backward()
            # End of the training step: apply the update
            optimizer.step()
        # Mean loss over all steps so far (kept as a float, not truncated to int)
        result_loss = sum(loss_list) / len(loss_list)
        torch.save({"model_state_dict": model.state_dict(), "optimizer_state_dict": optimizer.state_dict()}, f"./train_KoGPT2/{epoch}_kogpt2_checkpoint.pt")
    print("end")

*The trained KoGPT2 model is saved after every epoch
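One detail of the loop above is worth checking by hand: positions where the mask is 0 have all their logits overwritten with `Sneg`, and the per-token losses are then summed over every position. The sketch below redoes that arithmetic in plain Python (no PyTorch, toy numbers, and the helper names `log_softmax` and `token_loss` are my own) and compares it with an alternative that multiplies the per-token loss by the mask. It assumes the cross-entropy uses the usual numerically stable log-softmax.

```python
import math
from typing import List


def log_softmax(logits: List[float]) -> List[float]:
    """Numerically stable log-softmax over one position's vocabulary logits."""
    top = max(logits)
    log_norm = math.log(sum(math.exp(x - top) for x in logits))
    return [x - top - log_norm for x in logits]


def token_loss(logits: List[float], label: int) -> float:
    """Cross-entropy for a single position (like reduction="none")."""
    return -log_softmax(logits)[label]


SNEG = -1e18     # same fill value as Sneg in the post
vocab_size = 4   # toy vocabulary size

# One sequence with three positions; only the first two are response tokens.
logits = [[2.0, 0.5, 0.1, -1.0], [0.3, 1.7, 0.2, 0.0], [0.9, 0.1, 0.4, 0.6]]
labels = [0, 1, 3]
mask = [1, 1, 0]

# (a) As in the loop above: overwrite masked positions with SNEG, sum everything.
filled = [row if m == 1 else [SNEG] * vocab_size for row, m in zip(logits, mask)]
loss_filled = sum(token_loss(r, y) for r, y in zip(filled, labels)) / sum(mask)

# (b) Alternative: keep the logits and zero the per-token loss where mask == 0.
loss_masked = sum(
    m * token_loss(r, y) for r, y, m in zip(logits, labels, mask)
) / sum(mask)

# Each masked position in (a) adds a constant log(vocab_size) to the sum.
extra = loss_filled - loss_masked
```

In this toy version, variant (a) is larger than variant (b) by `log(vocab_size)` per masked position, divided by the number of unmasked tokens. Because that extra term is a constant, it would not change the gradients, but it does suggest that the `avg_loss` values printed by the loop may be inflated when batches contain a lot of padding.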

4) Continuing the KoGPT2 training

    import torch
    from torch.utils.data import DataLoader
    from transformers import GPT2LMHeadModel, PreTrainedTokenizerFast

    # BOS, EOS, PAD, MASK, device, ChatbotDataset, ChatbotData and collate_batch
    # are not defined in this post; they are assumed to exist already.

    # Load the tokenizer
    TOKENIZER = PreTrainedTokenizerFast.from_pretrained("skt/kogpt2-base-v2",
                                                        bos_token=BOS, eos_token=EOS, unk_token="<unk>",
                                                        pad_token=PAD, mask_token=MASK)

    # Load the most recently trained KoGPT2 model
    model = GPT2LMHeadModel.from_pretrained("skt/kogpt2-base-v2")
    model.resize_token_embeddings(len(TOKENIZER))
    checkpoint = torch.load("./train_KoGPT2/4_kogpt2_checkpoint.pt", map_location=device)
    model.load_state_dict(checkpoint["model_state_dict"])
    max_length = 64
    model.to(device)
    model.train()  # put the model in training mode

    # Training data
    train_set = ChatbotDataset(ChatbotData, max_len=64)
    train_dataloader = DataLoader(train_set, batch_size=32, num_workers=0, shuffle=True, collate_fn=collate_batch)

    # Learning rate, loss function, and optimizer
    learning_rate = 3e-5
    criterion = torch.nn.CrossEntropyLoss(reduction="none").to(device)
    optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)
    optimizer.load_state_dict(checkpoint["optimizer_state_dict"])
    Sneg = -1e18

    # Continue training from the previously trained and saved model
    print("Additional Epoch start")
    for epoch in range(2):
        for batch_idx, samples in enumerate(train_dataloader):
            if batch_idx % 50 == 0:
                print(f"epoch: {epoch}", f"batch_idx: {batch_idx}")
            optimizer.zero_grad()
            token_ids, mask, label = samples
            out = model(token_ids)
            out = out.logits  # vocabulary logits, shape (batch, seq_len, vocab_size)
            # Broadcast the mask over the vocabulary dimension
            mask_3d = mask.unsqueeze(dim=2).repeat_interleave(repeats=out.shape[2], dim=2).to(device)
            # Replace the logits at masked-out positions with a large negative constant
            mask_out = torch.where(mask_3d == 1, out, Sneg * torch.ones_like(out)).to(device)
            # CrossEntropyLoss expects (batch, vocab_size, seq_len)
            loss = criterion(mask_out.transpose(2, 1), label).to(device)
            # Normalize by the number of unmasked tokens
            avg_loss = loss.sum() / mask.sum()
            avg_loss.backward()
            # End of the training step: apply the update
            optimizer.step()
        torch.save({"model_state_dict": model.state_dict(), "optimizer_state_dict": optimizer.state_dict()}, f"./train_KoGPT2/{epoch+5}_kogpt2_checkpoint.pt")
    print("end")

*To avoid Colab runtime errors, out-of-memory errors, and overloading my laptop, I did not train in a single run; instead I split the training into chunks of 1 or 2 epochs at a time
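Since training is resumed in chunks, the checkpoint to load and the offset for the next file name (`4_...` and `epoch+5` above) have to be updated by hand before every run. A small helper could look them up instead. The sketch below is only an illustration under my own assumptions: `latest_checkpoint` is a hypothetical name, and the demo uses empty placeholder files in a temporary directory rather than real checkpoints.

```python
import re
import tempfile
from pathlib import Path
from typing import Optional, Tuple

# File names follow the pattern used in the post: "<epoch>_kogpt2_checkpoint.pt"
CHECKPOINT_PATTERN = re.compile(r"^(\d+)_kogpt2_checkpoint\.pt$")


def latest_checkpoint(directory: Path) -> Optional[Tuple[int, Path]]:
    """Return (epoch, path) of the highest-numbered checkpoint, or None."""
    found = []
    for path in directory.iterdir():
        match = CHECKPOINT_PATTERN.match(path.name)
        if match:
            found.append((int(match.group(1)), path))
    return max(found, key=lambda item: item[0]) if found else None


# Demo with empty placeholder files in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    ckpt_dir = Path(tmp)
    for epoch in range(5):  # epochs 0..4, i.e. after five epochs of training
        (ckpt_dir / f"{epoch}_kogpt2_checkpoint.pt").touch()

    last_epoch, last_path = latest_checkpoint(ckpt_dir)
    last_name = last_path.name
    next_epoch = last_epoch + 1  # index to use for the next saved checkpoint
```

In a real run, `last_path` would be passed to `torch.load`, and `next_epoch + epoch` would replace the hard-coded `epoch+5` in the save path.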

5) Chatbot performance evaluation

*Evaluated the chatbot after training for a total of 5 epochs

*The chatbot's performance is still very poor
